DevOps

Wednesday, 10 May 2023

Friday, 24 March 2023

Minikube Installation on Ubuntu 20.04

Minikube installation

========================

1:Install kubectl binary with curl on Linux

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

2:Validate the binary (optional)

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256"

echo "$(cat kubectl.sha256)  kubectl" | sha256sum --check

3:Install kubectl

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

4:Docker installation

sudo apt update

sudo apt install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu focal stable"

sudo apt update

apt-cache policy docker-ce

sudo apt install docker-ce

sudo systemctl status docker

sudo groupadd docker

sudo usermod -aG docker $USER

newgrp docker

docker run hello-world   # verify that Docker works without sudo

================================================================

Minikube install

=======================

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

minikube start

kubectl get nodes

kubectl get ns

Sunday, 19 March 2023

sonarqube

SonarQube — An Easy Set-up

SonarQube is a Free and Open-Source Code Quality Platform.

Suggested resources: 2 CPU cores and 4 GB of RAM.

Installing SonarQube might seem daunting for newcomers. This guide shows how to set up SonarQube and test your code's quality in less than 30 minutes.

The easiest way to set up SonarQube is via a docker image. First you have to make sure that docker is installed on your machine.

docker --version

Run the SonarQube docker container with the following command:

docker run -d --name sonarqube -p 9000:9000 sonarqube

Once the container has started, go to http://localhost:9000 in your browser (if you are running on a VM, replace localhost with the VM's IP address).

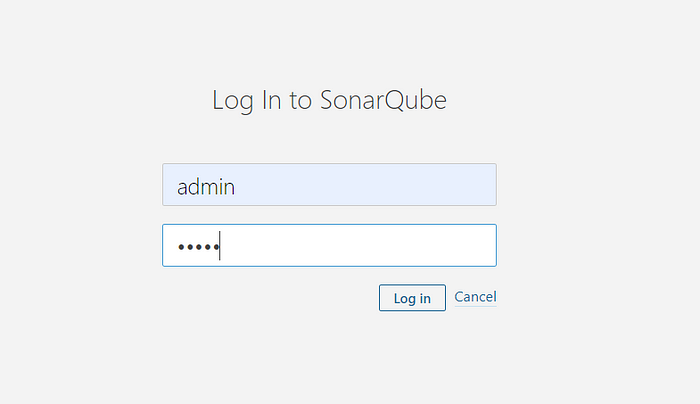

When the SonarQube page is loaded, login with the following credentials.

Username: admin

Password: admin

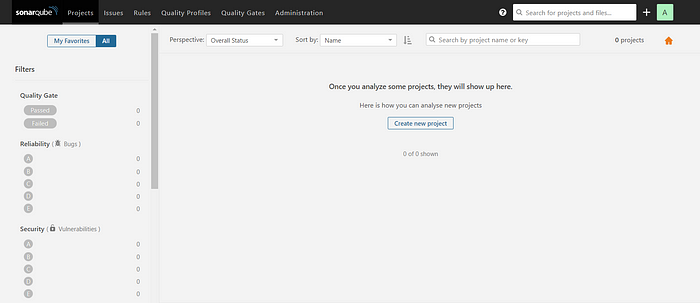

You will be directed to the Projects page. Click on Create new project.

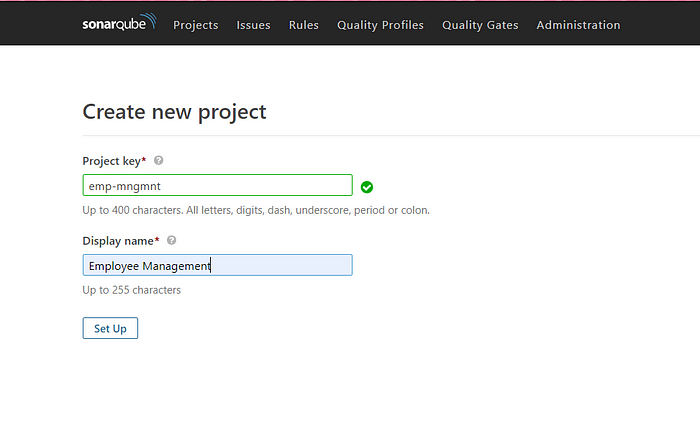

Enter a Project Key and a Display Name of choice. Note that you will be using the same values in your source code later.

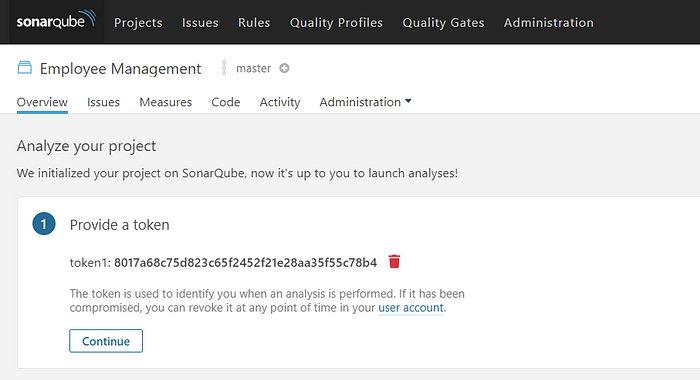

Next you will be asked to generate a token. Provide a unique token name (e.g. token1) and click Continue.

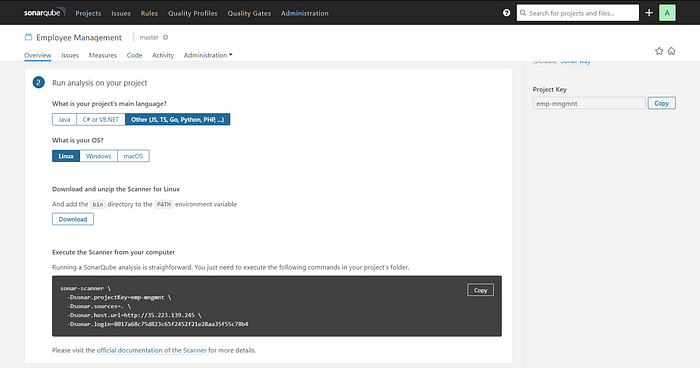

Select your project’s main language and the operating system.

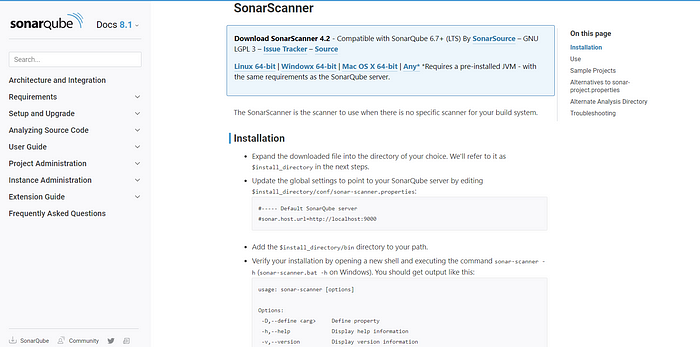

Next download the SonarScanner and follow the installation steps given for your operating system. You will also have to add the bin directory to the PATH environment variable.

Once SonarScanner has successfully installed, open your source code and create a file named sonar-project.properties. Add the following properties in the file created.

sonar.projectKey=<project-key>
sonar.projectName=<project-display-name>
sonar.projectVersion=<project-version-no>
sonar.sourceEncoding=UTF-8
sonar.sources=.

Add the Project Key and the Display Name which you gave previously in the respective rows.

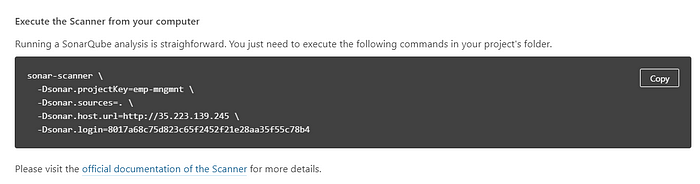

Now go back to the SonarQube page on the browser and copy the Scanner commands at the bottom to run the SonarScanner.

Go to the location of your source code (where the sonar-project.properties file is located ) and run the copied commands.

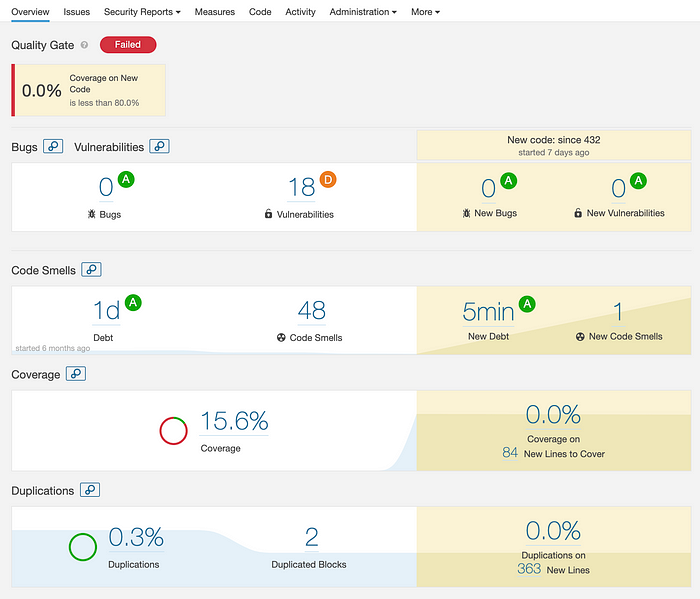

Now refresh the SonarQube page on the browser. You can see the quality issues in your code shown here.

Here we are presented with the bugs, vulnerabilities and code smells in our code. Coverage denotes the test coverage. Statistics about code duplication are also shown. The quality improvement since the last analysis is given on the right.

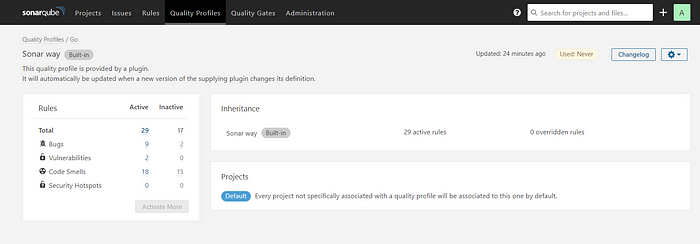

SonarQube also includes features like quality profiles and quality gates. With quality profiles you can define a set of rules for a particular language for the quality check. There is a default profile already set for all available languages. You can create custom quality profiles and use them on your project.

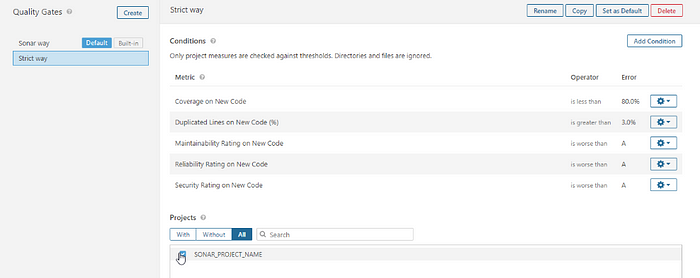

Quality gates let you define a set of boolean conditions based on measurement thresholds against which projects are measured.

If the defined conditions are met, the quality gate will ‘pass’ the code quality test.

Thursday, 16 March 2023

kubernetes troubleshooting

Kubernetes is a powerful container orchestration platform used to manage containerized applications at scale. While Kubernetes is generally reliable, there are some common issues that can arise during deployment, configuration, and maintenance. Here are some common Kubernetes issues and their fixes:

Container Image Pull Errors: If you're unable to pull an image from a container registry, it could be due to several reasons, such as network connectivity issues, authentication issues, or a wrong image name. You can fix this by checking your network connectivity, confirming the image name, and ensuring you have the necessary credentials to access the container registry.

Cluster Resource Limitations: If your Kubernetes cluster is experiencing slow performance or crashes, it could be due to resource limitations. You can fix this by scaling up your cluster's resources, such as increasing the number of nodes or upgrading the CPU and RAM of existing nodes.

Pod Scheduling Issues: If your pods aren't being scheduled to run on available nodes, it could be due to insufficient resources or pod affinity/anti-affinity rules. You can fix this by increasing the available resources, modifying the scheduling rules, or using node selectors to specify which nodes the pods should run on.

Service Discovery Issues: If your pods can't discover or connect to services in the cluster, it could be due to incorrect service configurations or issues with the DNS resolution. You can fix this by reviewing your service configurations and DNS settings, ensuring that your services are reachable via their DNS names, and using Kubernetes' built-in service discovery mechanisms.

Node or Cluster Failure: If your node or cluster fails, you can restore operations by creating new nodes or replacing failed nodes with new ones. You can also use Kubernetes' built-in replication and failover mechanisms to ensure that your applications remain available in the event of a node or cluster failure.

In general, many Kubernetes issues can be avoided by following best practices for deployment, configuration, and maintenance. These include regularly updating and patching your Kubernetes components, using resource quotas to prevent resource exhaustion, and monitoring your cluster and applications for performance issues.
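The checks described above can be sketched as a few small shell helpers. This is only a starting point, not a complete runbook: the function names (pod_diagnose, cluster_capacity, service_check) are made up for this example, while the kubectl subcommands they call are standard. The last command in cluster_capacity assumes metrics-server is installed in the cluster.

```shell
# Hypothetical helper names; only the kubectl subcommands are standard.

# Image pull errors and scheduling issues: the Events section of
# "describe" shows reasons such as ImagePullBackOff or FailedScheduling.
pod_diagnose() {
  # usage: pod_diagnose <pod-name> [namespace]
  pod="$1"
  ns="${2:-default}"
  kubectl describe pod "$pod" -n "$ns"
  kubectl get events -n "$ns" --sort-by=.metadata.creationTimestamp
  # Logs of the previous container instance, if the container restarted
  kubectl logs "$pod" -n "$ns" --previous || true
}

# Resource limitations and node failures: node status and allocated resources.
cluster_capacity() {
  kubectl get nodes -o wide
  kubectl describe nodes
  kubectl top nodes   # assumes metrics-server is installed
}

# Service discovery issues: a service without endpoints usually means
# its selector matches no ready pods.
service_check() {
  # usage: service_check <service-name> [namespace]
  svc="$1"
  ns="${2:-default}"
  kubectl get svc "$svc" -n "$ns"
  kubectl get endpoints "$svc" -n "$ns"
}
```

For example, after loading these functions into your shell, pod_diagnose my-pod my-namespace prints the pod description, recent events and previous logs in one go (my-pod and my-namespace are placeholders).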

Monday, 13 March 2023

ANSIBLE

ANSIBLE

installation on ubuntu:

vi ans.sh

apt update -y

apt install software-properties-common -y

apt-add-repository ppa:ansible/ansible -y

apt update -y

apt install ansible -y

ansible --version

Sunday, 12 March 2023

DEVOPS_EXPERIENCED QUS & ANS

DEVOPS INTERVIEW QUESTIONS AND ANSWERS

GIT

1: What is Git flow?

Ans: Git flow is a branching model for Git that provides a standardized approach to organizing the development and release of software. It was first introduced by Vincent Driessen in 2010 and has since become a popular model used by many software development teams.

At a high level, Git flow involves two main branches: master and develop. The master branch is used to store the official release history, while the develop branch is used as the main development branch.

In addition to these two main branches, Git flow also defines a set of supporting branches that are used to facilitate the development and release process. These include:

- Feature branches: Used to develop new features for the software.

- Release branches: Used to prepare the software for release.

- Hotfix branches: Used to quickly fix critical bugs in the software.

The Git flow model emphasizes the importance of code review and testing throughout the development process, and also encourages frequent releases to ensure that changes are delivered to users in a timely manner.

Overall, Git flow provides a clear and structured approach to managing software development, making it easier for teams to collaborate and coordinate their efforts.
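The branch structure described above can be tried out in a throwaway repository. The sketch below uses plain git commands rather than the git-flow helper tool, and the branch names feature/login and release/1.0, the file names and the tag v1.0 are invented for illustration. A hotfix branch would follow the same pattern as the release branch, but start from master.

```shell
set -e
repo="$(mktemp -d)" && cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master   # name the first branch "master"
git config user.name "Demo User"
git config user.email "demo@example.com"
git commit -q --allow-empty -m "Initial release"

# develop is the long-lived development branch
git checkout -q -b develop

# A feature branch starts from develop and is merged back into it
git checkout -q -b feature/login develop
echo "login form" > login.txt
git add login.txt
git commit -q -m "Add login form"
git checkout -q develop
git merge -q --no-ff -m "Merge feature/login into develop" feature/login
git branch -q -d feature/login

# A release branch starts from develop, gets release-only commits,
# and ends up on master (tagged) and back on develop
git checkout -q -b release/1.0 develop
echo "1.0" > VERSION
git add VERSION
git commit -q -m "Bump version to 1.0"
git checkout -q master
git merge -q --no-ff -m "Release 1.0" release/1.0
git tag -a v1.0 -m "Version 1.0"
git checkout -q develop
git merge -q --no-ff -m "Merge release/1.0 back into develop" release/1.0
git branch -q -d release/1.0

# Show the resulting history of both main branches
git log --oneline --graph --all
```

The --no-ff flag forces a merge commit even when a fast-forward would be possible, so each feature and release stays visible as a unit in the history.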

2: Difference between git merge and git rebase?

Ans: Git merge and git rebase are two ways to integrate changes from one branch into another branch in Git. Although they can achieve the same end result, they do so in different ways, which can affect the commit history and the way conflicts are handled.

The main difference between git merge and git rebase is how they integrate the changes from one branch into another:

- Git merge creates a new commit that combines the changes from two or more branches. The new commit has two or more parent commits and creates a merge point in the commit history.

- Git rebase moves the entire branch to a new base commit and reapplies each commit from the old branch on top of the new base commit. This results in a linear commit history with no merge commits.

Here are some specific differences between git merge and git rebase:

- Git merge preserves the commit history of both branches, while git rebase rewrites the commit history of the original branch.

- Git merge is a non-destructive operation that does not change any existing commits, while git rebase modifies the original branch's commit history by creating new commits.

- Git merge is useful for integrating independent changes into a shared branch, while git rebase is useful for integrating changes from one branch onto another while keeping a linear commit history.

To summarize, git merge and git rebase are both useful tools for integrating changes from one branch into another in Git, but they have different effects on the commit history and should be used in different situations depending on the goals of the project.
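The effect on history can be checked in a throwaway repository. In the sketch below, the branch names feature-merge and feature-rebase and all file names are made up for the demonstration. Both branches fork from the same commit, then one is updated with merge and the other with rebase.

```shell
set -e
repo="$(mktemp -d)" && cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main
git config user.name "Demo User"
git config user.email "demo@example.com"

echo "base" > base.txt && git add base.txt && git commit -q -m "Base commit"

# Two feature branches fork from the same commit
git branch feature-merge
git branch feature-rebase

# main moves ahead by one commit
echo "main work" > main.txt && git add main.txt && git commit -q -m "Work on main"

# Each feature branch gets one commit of its own
git checkout -q feature-merge
echo "a" > a.txt && git add a.txt && git commit -q -m "Feature A"
git checkout -q feature-rebase
echo "b" > b.txt && git add b.txt && git commit -q -m "Feature B"

# Option 1: merge main into feature-merge, which creates a merge commit
git checkout -q feature-merge
git merge -q --no-edit main
# rev-list prints the commit hash followed by its parent hashes
merge_words="$(git rev-list --parents -n 1 HEAD | wc -w | tr -d ' ')"
echo "parents of merge commit: $((merge_words - 1))"

# Option 2: rebase feature-rebase onto main, which replays the commit
git checkout -q feature-rebase
old_hash="$(git rev-parse HEAD)"
git rebase -q main
new_hash="$(git rev-parse HEAD)"
rebase_merges="$(git rev-list --merges --count main..HEAD)"
echo "hash before rebase: $old_hash"
echo "hash after rebase:  $new_hash"
echo "merge commits on rebased branch: $rebase_merges"
```

The merged branch ends in a commit with two parents, while the rebased branch contains the same change under a new hash and no merge commit.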

3: When should you use git merge and when git rebase?

Ans: The decision to use git merge or git rebase largely depends on the specific situation and the desired outcome of the integration of changes from one branch to another.

Git merge is generally best suited for situations where you want to combine independent branches with their own unique history. This is useful when multiple developers are working on a project and making changes to a shared branch, but want to keep their changes independent until they are ready to be merged together.

For example, if two developers are working on separate features in their own feature branches and want to merge them back into the main development branch, they can use git merge to combine the changes into a new merge commit that integrates the changes from both branches into the main branch.

On the other hand, git rebase is useful for situations where you want to incorporate changes from one branch onto another while maintaining a linear commit history. This is particularly useful for keeping the commit history clean and easy to follow, as it avoids the creation of extra merge commits that can clutter the history.

For example, if a feature branch has been created off of the main development branch, and changes have been made to both branches since then, you can use git rebase to replay the feature branch's commits on top of the tip of the development branch, so that the history reads as one linear sequence.

In summary, git merge is generally best for integrating independent branches with their own unique history, while git rebase is useful for incorporating changes from one branch onto another while maintaining a clean, linear commit history. Because rebase rewrites commits, it is best kept to branches that other people have not already pulled.

4: What is cherry-pick?

Ans: Cherry-pick is a Git command that allows you to pick a specific commit from one branch and apply it to another branch. This can be useful when you want to selectively apply changes from one branch to another, without having to merge the entire branch.

To use cherry-pick, you first need to identify the commit that you want to apply to another branch. You can do this by running git log to view the commit history and find the specific commit you want to cherry-pick.

Once you have identified the commit, switch to the branch that should receive it and run the following command:

git cherry-pick <commit-hash>

Where <commit-hash> is the hash or ID of the commit you want to cherry-pick.

When you run this command, Git will create a new commit in the current branch that contains the changes from the cherry-picked commit. This new commit will have a different commit hash than the original commit, but it will contain the same changes.

It's important to note that cherry-picking a commit can result in conflicts if the changes in the commit overlap with changes in the destination branch. If this happens, you will need to resolve the conflicts manually before the cherry-pick can be completed.

Cherry-pick can be a powerful tool for selectively applying changes from one branch to another, but it should be used with care, because the same change then exists as two separate commits, which can cause conflicts when the branches are merged later.
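A small experiment in a throwaway repository shows this behaviour. The branch name feature and the file names are invented for the example. The feature branch holds a finished fix and some unfinished work, and only the fix is copied to main.

```shell
set -e
repo="$(mktemp -d)" && cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main
git config user.name "Demo User"
git config user.email "demo@example.com"

echo "base" > base.txt && git add base.txt && git commit -q -m "Base commit"

# The feature branch gets a finished fix followed by unfinished work
git checkout -q -b feature
echo "fix" > fix.txt && git add fix.txt && git commit -q -m "Fix a bug"
fix_hash="$(git rev-parse HEAD)"
echo "wip" > wip.txt && git add wip.txt && git commit -q -m "Unfinished work"

# main moves on independently
git checkout -q main
echo "main work" > main.txt && git add main.txt && git commit -q -m "Work on main"

# Copy only the fix onto main
git cherry-pick "$fix_hash" >/dev/null
picked_hash="$(git rev-parse HEAD)"

echo "original commit: $fix_hash"
echo "new commit:      $picked_hash"
ls
```

After the cherry-pick, main contains fix.txt but not wip.txt, and the copied commit has its own hash.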

5: Difference between CVS and VCS?

Ans: CVS (Concurrent Versions System) and VCS (Version Control System) are related terms for software that lets developers manage changes to their source code over time. However, they are not the same thing.

CVS is a specific VCS that was popular in the 1990s and early 2000s. It is a client-server system that allows multiple developers to work on the same codebase at the same time. CVS tracks changes to individual files in a repository and allows developers to check out specific versions of the code, make changes, and commit their changes back to the repository.

VCS, on the other hand, is a broader term that refers to any software tool that allows developers to manage changes to their code over time. This includes not only client-server systems like CVS, but also distributed version control systems like Git and Mercurial.

One of the main differences between CVS and modern VCS tools is the way they handle branching and merging. CVS is centralized: the repository lives on a server, branches are created in that central repository, and merging changes back into the main branch is comparatively cumbersome. Distributed tools such as Git give each developer their own local copy of the repository, including its full history, and changes are combined using commands like git merge and git rebase.

Another difference is that modern VCS tools typically offer more advanced features than CVS, such as the ability to work offline, the ability to easily create and manage multiple branches, and more powerful tools for tracking changes and resolving conflicts.

In summary, CVS is one specific VCS that was popular in the past, while VCS is a broader term that encompasses a wide range of tools used for managing changes to source code. Modern VCS tools typically offer more advanced features than CVS, and many of them use a distributed approach to branching and merging.

6: Difference between git pull and git fetch?

Ans: Both git pull and git fetch are Git commands used to update a local repository with changes made to a remote repository. However, they have different ways of updating the local repository and handling conflicts.

git fetch downloads new changes from the remote repository, but it does not integrate those changes with the local repository. It only updates the remote tracking branches, which are references to the state of the remote branches. This means that when you run git fetch, you are not changing any of your local files or branches.

On the other hand, git pull downloads new changes from the remote repository and integrates those changes with the local repository. It is essentially a combination of git fetch followed by git merge. This means that when you run git pull, you are actually changing your local files and your current branch to include the state of the remote branch.

If there are conflicts between the local and remote repositories, git fetch will not attempt to resolve them, while git pull will automatically try to merge the changes. This can sometimes lead to conflicts that need to be resolved manually.

In summary, git fetch only downloads changes from the remote repository and updates the remote tracking branches, while git pull downloads and integrates changes with the local repository. A common workflow is to run git fetch first to see what has changed in the remote repository, and then run git merge (or git pull) to integrate those changes into the local branch.
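The difference is easy to observe with a local bare repository standing in for the remote server. In this sketch the directory names origin.git, alice and bob, and the file file.txt, are made up for the demonstration.

```shell
set -e
work="$(mktemp -d)" && cd "$work"

# A bare repository plays the role of the remote server
git init -q --bare origin.git
git -C origin.git symbolic-ref HEAD refs/heads/main

# Alice clones the (still empty) remote and pushes the first commit
git clone -q origin.git alice 2>/dev/null
cd alice
git symbolic-ref HEAD refs/heads/main
git config user.name "Alice"
git config user.email "alice@example.com"
echo "v1" > file.txt && git add file.txt && git commit -q -m "First commit"
git push -q origin main
cd ..

# Bob clones the remote, then Alice pushes a second commit
git clone -q origin.git bob
cd alice
echo "v2" > file.txt && git commit -q -am "Second commit"
git push -q origin main
cd ../bob

# fetch: origin/main moves, Bob's branch and files stay as they were
git fetch -q origin
local_after_fetch="$(git rev-parse main)"
remote_after_fetch="$(git rev-parse origin/main)"
file_after_fetch="$(cat file.txt)"

# pull: the fetched commits are integrated into Bob's branch
git pull -q --ff-only origin main
local_after_pull="$(git rev-parse main)"
file_after_pull="$(cat file.txt)"

echo "file after fetch: $file_after_fetch"
echo "file after pull:  $file_after_pull"
```

After the fetch, Bob's file still reads v1 although origin/main already points at the new commit. Only the pull brings the working copy to v2.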

7: What is git stash?

Ans: git stash is a Git command that allows you to temporarily save changes to your working directory and index without committing them to a branch. This can be useful when you need to switch to a different branch, but don't want to commit your changes yet.

When you run git stash, Git saves your changes on a stack called the "stash" and resets your working directory to the last commit. Stash entries are stored locally in your repository, but they are not part of any branch's history and are not transferred when you push. The stash is simply a place to store changes temporarily.

Once you have stashed your changes, you can switch to a different branch or commit without worrying about your changes conflicting with other changes in the repository. You can also apply your stashed changes to a different branch or commit later on.

To apply your stashed changes, you can use the git stash apply command. This will apply the most recent stash to your working directory. If you have multiple stashes, you can specify which stash to apply using the git stash apply stash@{n} command, where n is the index of the stash you want to apply.

You can also list the stashes you have saved using the git stash list command, and remove a stash using the git stash drop command.

In summary, git stash lets you put unfinished work aside and return to a clean working directory, so that you can switch to a different branch or commit without committing your changes yet.
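The full stash cycle can be walked through in a throwaway repository. In this sketch the file notes.txt, the branch other-branch and the stash message are invented for the example.

```shell
set -e
repo="$(mktemp -d)" && cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main
git config user.name "Demo User"
git config user.email "demo@example.com"

echo "line 1" > notes.txt && git add notes.txt && git commit -q -m "Add notes"

# Make an uncommitted change, then put it aside
echo "line 2 (unfinished)" >> notes.txt
git stash push -q -m "WIP on notes"
clean_status="$(git status --porcelain)"            # empty when the tree is clean
stash_count="$(git stash list | wc -l | tr -d ' ')"

# With a clean working directory, switching branches is safe
git checkout -q -b other-branch
git checkout -q main

# Bring the change back, then delete the stash entry
git stash apply -q 'stash@{0}'
restored="$(tail -n 1 notes.txt)"
git stash drop -q 'stash@{0}'
remaining="$(git stash list | wc -l | tr -d ' ')"

echo "stashes while stashed: $stash_count"
echo "restored line: $restored"
echo "stashes after drop: $remaining"
```

git stash pop combines the apply and drop steps into one command when the stash is no longer needed afterwards.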

MAVEN

1. What is Maven, and how does it work? Maven is a build automation tool used for building and managing projects in Java. It follows the build lifecycle and uses a Project Object Model (POM) file to describe the project structure, dependencies, and build process.

2. What is the POM file, and what does it contain? The Project Object Model (POM) file is an XML file that contains information about the project, such as its group ID, artifact ID, version, and dependencies. It also contains information about the build process, such as the plugins, goals, and phases to execute.

3. What are the advantages of using Maven? Maven provides several advantages, such as dependency management, standardized project structure, and automated build processes. It also helps to reduce development time and increase productivity by providing a centralized repository for dependencies and plugins.

4. What is a Maven repository, and what is its purpose? A Maven repository is a centralized location for storing and sharing project dependencies and plugins. It provides a way for developers to easily manage and retrieve dependencies and plugins required for their projects.

5. What are Maven plugins, and how are they used? Maven plugins are extensions that provide additional functionality to the build process. They are used to perform tasks such as compiling code, running tests, and packaging the project. Plugins can be configured in the POM file and executed during specific build phases.

6. How do you create a Maven project using the command line? To create a Maven project using the command line, use the following command:

mvn archetype:generate -DgroupId=com.example.project -DartifactId=my-project -DarchetypeArtifactId=maven-archetype-quickstart -DinteractiveMode=false

This will create a new Maven project with the group ID "com.example.project," artifact ID "my-project," and a basic project structure.

7. How do you run a Maven build using the command line? To run a Maven build using the command line, navigate to the project directory and use the following command:

mvn clean install

This will clean the project, compile the source code, run the tests, package the project (into a JAR file by default), and install the resulting artifact into your local Maven repository.

8. What is the Maven Central Repository, and how is it used? The Maven Central Repository is a public repository that contains a large number of open-source Java libraries and dependencies. It can be used to search for and retrieve dependencies required for a project. Maven uses it by default, so it does not have to be configured as a repository in the POM file.

9. What is the difference between a snapshot and a release version in Maven? A snapshot version (with a version number ending in -SNAPSHOT) is a development version of a project that is subject to change. It is used to indicate that a project is still in active development and should not be considered stable. A release version, on the other hand, is a stable version of a project that has been tested and is ready for deployment.

10. How can you exclude a dependency from a Maven project? To exclude a dependency from a Maven project, you can add an exclusion element to the dependency in the POM file. For example:

<dependency>
  <groupId>com.example</groupId>
  <artifactId>my-dependency</artifactId>
  <version>1.0.0</version>
  <exclusions>
    <exclusion>
      <groupId>org.springframework</groupId>
      <artifactId>spring-core</artifactId>
    </exclusion>
  </exclusions>
</dependency>

This will exclude the transitive "spring-core" dependency that "my-dependency" would otherwise bring into the project.

Jenkins

1: What is CI/CD?

CI/CD stands for Continuous Integration/Continuous Deployment. It is a set of practices that are used to automate the software development process, from building and testing to deployment and delivery.

Continuous Integration (CI) is the process of automatically building and testing code changes every time they are made. The goal of CI is to catch bugs early in the development process and to ensure that the code is always in a releasable state.

Continuous Deployment (CD) is the process of automatically deploying code changes to production after they have been built and tested. The goal of CD is to streamline the release process and to make it faster and more reliable.

Together, CI/CD enables teams to deliver high-quality software faster and more frequently, while reducing the risk of errors and downtime. It is a key practice in DevOps, which emphasizes collaboration and automation between development and operations teams.

2: How to secure Jenkins?

Securing Jenkins is important to ensure that only authorized users have access to the system and its resources. Here are some steps to help secure Jenkins:

1. Use strong passwords: Ensure that all user accounts have strong, unique passwords that are difficult to guess. It's also a good practice to enforce password policies, such as requiring passwords to be changed regularly.

2. Enable security features: Jenkins has built-in security features, such as authentication and authorization. Configure these features to ensure that only authorized users have access to the system.

3. Limit network access: Jenkins should only be accessible from authorized networks and IPs. Use firewalls and network security groups to restrict access to the Jenkins server.

4. Use SSL/TLS encryption: Enable SSL/TLS encryption for Jenkins to secure communication between the server and clients.

5. Keep Jenkins up-to-date: Make sure that Jenkins and its plugins are kept up-to-date with the latest security patches and fixes.

6. Use plugins for security: There are many security plugins available for Jenkins, such as the Role-based Authorization Strategy plugin, that can help you further secure your Jenkins installation.

7. Use a reverse proxy: Consider using a reverse proxy, such as Apache or Nginx, to secure the Jenkins server and add an additional layer of security.

By following these steps, you can help ensure that your Jenkins installation is secure and protected from unauthorized access.

How to configure security in Jenkins?

Configuring security in Jenkins involves several steps, including:

1. Enable Security: First, you need to enable security in Jenkins. Go to "Manage Jenkins" -> "Configure Global Security" -> "Enable Security".

2. Choose an Authentication Method: Select an authentication method for Jenkins. Jenkins supports built-in user accounts, LDAP/Active Directory, and SAML.

3. Configure User Accounts: Create user accounts for each user who needs access to Jenkins. Ensure that all user accounts have strong, unique passwords, and implement two-factor authentication where possible.

4. Configure Authorization: Configure authorization, for example with the Role-based Authorization Strategy plugin (which has to be installed separately), to restrict access to Jenkins resources based on user roles and permissions.

5. Configure Jenkins Security Realm: Configure the Jenkins Security Realm by setting it to a secure value, such as "Jenkins' own user database" or an LDAP directory that is not publicly accessible.

6. Configure Job Level Security: Implement job level security by restricting access to jobs based on user roles and permissions.

7. Configure Plugin Management: Limit the number of plugins installed on your Jenkins server to only the ones that are required. Keep them up-to-date with the latest security patches and fixes.

8. Secure Build Environment: Configure the build environment to run in a secure mode by using containerization and implementing security practices such as file system encryption.

By following these steps, you can configure security in Jenkins and protect your valuable software assets and sensitive information from unauthorized access.

Difference between declarative and scripted pipeline in Jenkins?

Declarative and Scripted Pipelines are two ways to define pipelines in Jenkins.

Declarative Pipeline:

Declarative Pipeline is a newer, more user-friendly approach to defining pipelines in Jenkins. It uses a domain-specific language (DSL) with a predefined structure built on top of Groovy, in which the whole pipeline is written inside a pipeline block as a series of stages and steps. Declarative pipelines provide a simplified syntax and offer a more structured approach to pipeline definition. It is more opinionated, enforcing best practices and providing default behaviors.

Some key features of Declarative Pipeline are:

- Declarative Pipelines define the stages and steps of the pipeline in a clean and concise way.

- Declarative Pipelines allow you to easily enforce best practices and code reuse across all pipelines.

- Declarative Pipelines support parallel stages and conditional logic.

Scripted Pipeline:

Scripted Pipeline is the original, more flexible way of defining pipelines in Jenkins. It uses a Groovy-based DSL, which allows you to define the pipeline using Groovy scripts. Scripted pipelines are more flexible than declarative pipelines and can be used for more complex build processes.

Some key features of Scripted Pipeline are:

- Scripted Pipelines provide more control and flexibility over the pipeline execution process.

- Scripted Pipelines allow you to define and reuse custom functions in your scripts.

- Scripted Pipelines are more verbose than Declarative Pipelines, making them more challenging to maintain.

In summary, Declarative Pipelines are a more opinionated, structured way of defining pipelines, while Scripted Pipelines are more flexible and can be used for more complex build processes.

Jenkins workspace location?

The workspace in Jenkins is the location on the file system where Jenkins jobs are executed. By default, the workspace is located in the Jenkins home directory under the "workspace" directory. The exact location of the workspace varies depending on the operating system and the installation method used.

On Unix-like systems (e.g., Linux, macOS), the workspace is typically located at:

/var/lib/jenkins/workspace/<job-name>

On Windows systems, the workspace is typically located at:

C:\Program Files (x86)\Jenkins\workspace\<job-name>

You can also configure the workspace location for individual jobs by specifying a custom workspace directory in the job configuration. To do this, go to the job configuration page and scroll down to the "Advanced Project Options" section. Then, specify a custom workspace directory in the "Use custom workspace" field.

Upstream and downstream in Jenkins?

In Jenkins, upstream and downstream jobs refer to the relationships between different jobs in a build pipeline.

Upstream Job:

An upstream job is a job that triggers another job. In a build pipeline, the upstream job is usually the job that is triggered first, and it triggers downstream jobs as a result of its successful completion. Upstream jobs can pass parameters to downstream jobs, which can be used to configure the downstream job's execution.

Downstream Job:

A downstream job is a job that is triggered by an upstream job. In a build pipeline, a downstream job typically depends on the successful completion of its upstream job(s). Downstream jobs can also access artifacts and other resources generated by their upstream job(s).

For example, consider a build pipeline where Job A triggers Job B, which triggers Job C. In this scenario, Job A is the upstream job for Job B, and Job B is the upstream job for Job C. If Job A fails, Jobs B and C will not be triggered, and the build pipeline will stop. If Job A succeeds but Job B fails, Job C will not be triggered, and the build pipeline will stop after Job B.

Upstream and downstream relationships can be useful for creating complex build pipelines that involve multiple jobs and dependencies. By defining upstream and downstream relationships, you can create a robust, reliable build process that ensures that each job is executed in the correct order and that dependencies are properly managed.

Master-slave in Jenkins?

In Jenkins, a master-slave architecture is a distributed model where the workload is distributed across multiple machines. The Jenkins master is the main controller that manages the build system, and Jenkins slaves are additional nodes that perform the actual builds. Recent Jenkins versions call these the controller and the agents; this answer keeps the older terms. The master-slave architecture allows you to scale your Jenkins build system by adding more nodes as needed.

The Jenkins master is responsible for scheduling builds and distributing them to the available slaves. The master node also manages the configuration of the build system and stores the job configurations and build history. The Jenkins master node can be run on any machine that meets the Jenkins system requirements.

The Jenkins slave is a node that performs the actual build tasks. A slave node can be run on any machine that can access the Jenkins master over the network. Jenkins slaves can be configured to run on different operating systems or with different software configurations, providing additional flexibility for your build system.

To set up a Jenkins master-slave architecture, you need to:

1. Set up the Jenkins master node

2. Set up one or more Jenkins slave nodes

3. Configure the Jenkins master to use the slave nodes

4. Configure the jobs to run on the slave nodes

You can configure the master and slave nodes using the Jenkins web interface or by using Jenkins configuration files. When the Jenkins master schedules a build, it checks the available slave nodes to determine where the build should be executed. The build is then sent to the selected slave node for execution. Once the build is completed, the results are sent back to the Jenkins master for display in the web interface.

Using a master-slave architecture in Jenkins provides several benefits, including increased scalability, improved reliability, and better resource utilization. By distributing the build workload across multiple nodes, you can reduce build times and increase the number of builds that can be executed simultaneously.

Webhooks in Jenkins

are a mechanism that allows external systems to automatically trigger builds in

Jenkins when certain events occur. With webhooks, Jenkins can listen for HTTP

POST requests from external systems, which can contain information about a

specific event, such as a code commit, pull request, or issue update.

Webhooks are useful

for automating your build process and reducing the need for manual

intervention. By configuring webhooks, you can ensure that your builds are

triggered automatically when certain events occur, such as when code is pushed

to a repository or when a pull request is created.

To configure

webhooks in Jenkins, you need to perform the following steps:

1. Configure Jenkins to listen for webhooks: Jenkins needs a plugin that exposes an HTTP endpoint for incoming webhooks. One option is the "Generic Webhook Trigger" plugin, which lets Jenkins listen for HTTP POST requests from any external system. SCM-specific plugins, such as the GitHub plugin, provide their own webhook endpoints.

2. Create a webhook in the external system: To trigger builds in

Jenkins using a webhook, you need to create a webhook in the external system

that will send HTTP POST requests to Jenkins when a specific event occurs. For

example, if you are using GitHub as your version control system, you can create

a webhook that sends an HTTP POST request to Jenkins whenever a code commit is

pushed to a specific branch.

3. Configure the build job in Jenkins: After configuring Jenkins to

listen for webhooks and creating a webhook in the external system, you need to

configure the build job in Jenkins to use the webhook trigger. You can

configure the build job to perform specific actions when the webhook is

triggered, such as running a specific build script, sending notifications, or

publishing artifacts.
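To make step 2 concrete, here is a minimal Python sketch of the HTTP POST an external system would send. It assumes the Generic Webhook Trigger plugin and its /generic-webhook-trigger/invoke endpoint with a token query parameter; check the plugin documentation for your version. The Jenkins URL, the token, and the payload fields are hypothetical. The request is only built, not sent.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_webhook_request(jenkins_url, token, payload):
    """Build (but do not send) the HTTP POST an external system would make."""
    query = urlencode({"token": token})
    url = f"{jenkins_url.rstrip('/')}/generic-webhook-trigger/invoke?{query}"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_webhook_request(
    "http://jenkins.example.com:8080",   # hypothetical Jenkins URL
    "my-job-token",                      # hypothetical token configured on the job
    {"ref": "refs/heads/main", "repository": {"name": "demo"}},
)
# urllib.request.urlopen(request) would actually deliver the webhook.
```

In practice the version control system sends this request for you; building it by hand is mainly useful for testing that the job trigger is wired up.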

Overall, webhooks in

Jenkins provide a powerful way to automate your build process and reduce manual

intervention. By configuring webhooks, you can ensure that builds are triggered

automatically when specific events occur, streamlining your build process and

increasing your team's productivity.

stages in jenkins pipeline

In Jenkins pipeline, stages are a fundamental

concept that allow you to divide your pipeline into smaller, more manageable

units of work. Stages represent individual phases in your pipeline, such as

build, test, and deploy, and allow you to define specific actions that should be

performed within each phase.

Stages in Jenkins pipeline are defined using the

"stage" directive, which allows you to specify a name for the stage

and a set of actions that should be performed within the stage. For example,

the following pipeline defines three stages: "build",

"test", and "deploy":

pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // Perform build actions (a steps block needs at least one step)
                echo 'Building...'
            }
        }
        stage('Test') {
            steps {
                // Perform test actions
                echo 'Testing...'
            }
        }
        stage('Deploy') {
            steps {
                // Perform deploy actions
                echo 'Deploying...'
            }
        }
    }
}

In this example, each stage contains a set of

actions that should be performed as part of that stage. For example, the

"build" stage might include steps to compile code and generate

artifacts, while the "test" stage might include steps to run unit

tests and integration tests.

By breaking your pipeline into stages, you can easily visualize the progress of your pipeline and identify any bottlenecks or issues that may be slowing it down. You can also speed up a pipeline by running independent stages in parallel, using a parallel block inside a stage.

Overall, stages are a powerful concept in Jenkins

pipeline that allow you to break your pipeline into smaller, more manageable

units of work, making it easier to build, test, and deploy your applications.
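The way stages run one after another can be sketched in a few lines of Python. This is a toy model, not Jenkins code: it assumes the default behaviour where a failing stage stops the pipeline and later stages are skipped, and the stage functions are hypothetical placeholders.

```python
def run_pipeline(stages):
    """Run (name, action) pairs in order; skip everything after the first failure."""
    results = {}
    failed = False
    for name, action in stages:
        if failed:
            results[name] = "SKIPPED"
            continue
        try:
            action()
            results[name] = "SUCCESS"
        except Exception:
            results[name] = "FAILED"
            failed = True
    return results


# Hypothetical stage actions, for illustration only.
def build():
    pass


def run_tests():
    raise RuntimeError("unit tests failed")


def deploy():
    pass


results = run_pipeline([("Build", build), ("Test", run_tests), ("Deploy", deploy)])
```

The per-stage result is what the Jenkins stage view shows, which is why a failing test stage is easy to spot.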

ANSIBLE

features of ansible?

Ansible is an open-source automation tool that

provides a number of features that make it a popular choice for IT automation

and configuration management. Some of the key features of Ansible include:

1. Agentless: Ansible does not require any agents to be installed on remote hosts, making it easy to get started and manage.

2. Easy to learn and use: Ansible uses a simple, easy-to-read YAML syntax that makes it easy to write and understand playbooks.

3. Flexible and extensible: Ansible provides a wide range of modules and plugins that can be used to extend its functionality and integrate with other tools.

4. Idempotent: Most Ansible modules describe a desired state and only make a change when the system differs from it, which means that playbooks can be run multiple times without changing the end result.

5. Multi-tier orchestration: Ansible can be used to orchestrate complex multi-tier applications and manage the configuration of multiple hosts and services.

6. Task automation: Ansible can be used to automate a wide range of IT tasks, such as configuration management, application deployment, and infrastructure provisioning.

7. Community-driven: Ansible is backed by a large and active community of contributors, who create and maintain modules, playbooks, and other resources that make it easy to get started with Ansible.
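Idempotency is easier to see in code. The following is a minimal Python sketch of an idempotent operation, not an Ansible module: the function name, the file name, and the setting are assumptions chosen for illustration.

```python
import tempfile
from pathlib import Path


def ensure_line(path, line):
    """Make sure `line` is in the file; return True only if the file was changed."""
    path = Path(path)
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False            # already in the desired state, so do nothing
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


with tempfile.TemporaryDirectory() as tmp:
    config = Path(tmp) / "app.conf"      # hypothetical configuration file
    first_run = ensure_line(config, "max_connections = 100")
    second_run = ensure_line(config, "max_connections = 100")
    final_content = config.read_text()
```

The first call changes the file and the second does not, which mirrors the "changed" and "ok" statuses Ansible reports for a task.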

Overall, Ansible is a powerful and flexible automation

tool that provides a wide range of features and benefits for IT automation and

configuration management. Its agentless architecture, easy-to-learn syntax, and

wide range of modules and plugins make it a popular choice for automating IT

tasks of all kinds.

Difference between ansible and terraform?

Ansible and Terraform are two popular tools

used in IT automation and infrastructure management, but they have different

features and use cases.

1. Configuration Management vs. Infrastructure as Code: Ansible is a configuration management tool, while Terraform is an infrastructure as code tool. Ansible is used to manage the configuration of individual servers and services, while Terraform is used to provision and manage entire infrastructure components, such as networks, virtual machines, and load balancers.

2. Procedural vs. Declarative: Ansible uses a largely procedural approach to automation, where playbooks define the series of steps needed to configure a system and the steps run in the order written (although most individual modules are declarative about the state they enforce). Terraform, on the other hand, uses a declarative approach, where the desired state of infrastructure is defined, and Terraform works out the order of operations and handles the provisioning.

3. Agentless operation: Both tools are agentless. Ansible connects to the target servers over SSH (or WinRM on Windows) and needs no agent installed on them. Terraform runs on your workstation or CI server and talks to provider APIs, so it installs nothing on the infrastructure it manages. The main difference is that Terraform keeps a state file recording what it has created, while Ansible does not.

4. Applicability: Ansible is best suited for managing configuration of individual servers and services, such as installing software, managing users, and configuring applications. Terraform, on the other hand, is best suited for managing infrastructure components, such as creating and managing cloud resources, networking components, and load balancers.

5. Learning Curve: Ansible has a relatively low learning curve, thanks to its simple YAML syntax, making it accessible to new users. Terraform has a steeper learning curve, due to its advanced functionality and the need to learn the HashiCorp Configuration Language (HCL).

Overall, Ansible and Terraform are complementary

tools that can be used together to automate different aspects of IT

infrastructure. Ansible is best suited for managing configuration of individual

servers and services, while Terraform is best suited for provisioning and

managing infrastructure components.
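The declarative approach mentioned above comes down to comparing a desired state with the current one and deriving the actions. Here is a minimal Python sketch of that idea; it is not how Terraform is implemented, and the resource names and attributes are hypothetical.

```python
def plan(current, desired):
    """Compare current and desired resources and return the actions needed."""
    actions = []
    for name, spec in desired.items():
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("update", name))
    for name in current:
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical resources, for illustration only.
current = {"web-1": {"size": "small"}, "old-db": {"size": "large"}}
desired = {"web-1": {"size": "medium"}, "web-2": {"size": "small"}}
actions = plan(current, desired)
```

A procedural tool would instead list these create, update, and delete steps explicitly, in order, in the playbook.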

What is ansible vault?

Ansible Vault is a feature in Ansible that

provides secure storage for sensitive data, such as passwords, keys, and other

credentials. It allows you to encrypt files or individual variables, so that

they can be stored and shared securely across your Ansible projects.

Ansible Vault uses Advanced Encryption Standard (AES-256) encryption to secure the sensitive data, and requires a password or passphrase to decrypt the data when needed. This ensures that sensitive information is protected from unauthorized access, and can only be read by users who have the vault password.

To use Ansible Vault, you create a password-protected file called a Vault file, which contains the sensitive data. You can then reference the Vault file in your Ansible playbooks and roles, and Ansible will decrypt the data when needed, provided you supply the vault password at run time (for example with the --ask-vault-pass or --vault-password-file option).

Ansible Vault supports several modes of operation, including encrypting entire files, encrypting individual variables within files, and rekeying existing Vault files. The ansible-vault command provides subcommands to manage Vault files, such as create, view, edit, encrypt, decrypt, and rekey (which changes the encryption password or passphrase).

Overall, Ansible Vault provides a secure and

easy-to-use solution for storing and sharing sensitive data across your Ansible

projects, while ensuring that the data is protected from unauthorized access.

1. Creating an encrypted file: To create an encrypted file using Ansible Vault, you can use the "ansible-vault create" command, followed by the name of the file you want to create. You will then be prompted to enter a password for the file:

ansible-vault create secrets.yml

You can then add sensitive data to the file, such as passwords or API keys, and save the file. The data will be automatically encrypted using the password you entered.

2. Editing an encrypted file: To edit an existing encrypted file, you can use the "ansible-vault edit" command, followed by the name of the file you want to edit. You will be prompted to enter the password for the file:

ansible-vault edit secrets.yml

You can then edit the file as you normally would, and the changes will be automatically encrypted.

3. Using an encrypted variable: To use an encrypted variable in your Ansible playbook, define the variable in an encrypted file, load that file (for example with vars_files, or by placing it under group_vars), and reference the variable by name like any other variable. A common convention is to give vaulted variables a "vault_" prefix so they are easy to spot, but the prefix is simply part of the variable name and has no special meaning to Ansible. For example, if you have an encrypted file called "secrets.yml" containing a variable called "vault_db_password", you can reference it in your playbook like this:

- name: Configure database
  mysql_user:
    name: db_user
    password: "{{ vault_db_password }}"

When Ansible runs the playbook, it decrypts "secrets.yml" in memory using the vault password you supply at run time, for example with the --ask-vault-pass or --vault-password-file option.

4. Rekeying an existing encrypted file: If you want to change the password for an existing encrypted file, you can use the "ansible-vault rekey" command, followed by the name of the file you want to rekey. You will be prompted to enter the old password and the new password:

ansible-vault rekey secrets.yml

Once you enter both passwords, Ansible will automatically re-encrypt the file using the new password.

These are just a few examples of

how to use Ansible Vault. With Ansible Vault, you can store and share sensitive

data securely across your Ansible projects, without having to worry about

unauthorized access.
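A vault-encrypted file is plain text that starts with a header line such as $ANSIBLE_VAULT;1.1;AES256. The Python sketch below parses that header, which can be handy in a pre-commit check that refuses to commit an unencrypted secrets file. The function name and the sample lines are assumptions for illustration, and the sketch does not decrypt anything.

```python
def parse_vault_header(first_line):
    """Return (format_version, cipher, vault_id) or None if this is not a vault header."""
    parts = first_line.strip().split(";")
    if len(parts) < 3 or parts[0] != "$ANSIBLE_VAULT":
        return None
    # Format 1.2 headers carry an optional vault ID label as a fourth field.
    vault_id = parts[3] if len(parts) > 3 else None
    return parts[1], parts[2], vault_id


encrypted = parse_vault_header("$ANSIBLE_VAULT;1.1;AES256")
labelled = parse_vault_header("$ANSIBLE_VAULT;1.2;AES256;prod")
plain = parse_vault_header("db_password: hunter2")
```

A check script would read only the first line of secrets.yml and fail when the result is None.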

Ansible playbooks with

explanation?

---
- name: Configure web server
  hosts: web
  become: true
  tasks:
    - name: Install Apache web server
      apt:
        name: apache2
        state: present

    - name: Configure Apache virtual host
      template:
        src: /etc/apache2/sites-available/example.com.conf.j2
        dest: /etc/apache2/sites-available/example.com.conf
        owner: root
        group: root
        mode: '0644'
      notify:
        - restart Apache

    - name: Enable Apache virtual host
      file:
        src: /etc/apache2/sites-available/example.com.conf
        dest: /etc/apache2/sites-enabled/example.com.conf
        state: link
      notify:
        - restart Apache

  handlers:
    - name: restart Apache
      service:
        name: apache2
        state: restarted

1. The "---" on the first line marks the start of a YAML document. It is optional, but including it is a common convention.

2. The "name" field is a descriptive name for the play. It is used for informational purposes only.

3. The "hosts" field specifies the target hosts or groups

for the playbook. In this example, the playbook will run on hosts that are

members of the "web" group.

4. The "become" field is set to "true" to

enable privilege escalation, allowing the playbook to run with elevated

privileges (e.g. as the root user).

5. The "tasks" field is a list of tasks to be executed on

the target hosts.

6. Each task begins with a "name" field, which is a

descriptive name for the task.

7. The "apt" module is used to install the Apache web

server package on the target host. The "name" field specifies the

package name, and the "state" field is set to "present" to

ensure that the package is installed.

8. The "template" module is used to configure an Apache virtual host. The "src" field specifies the path to a Jinja2 template file on the control node, and the "dest" field specifies the path on the target host where the rendered template will be saved. The "owner", "group", and "mode" fields are used to set the file permissions and ownership. The "notify" field names the handler that should be triggered if the task changes something on the host.

9. The "file" module is used to enable the Apache virtual host by creating a symbolic link to the configuration file in the "sites-enabled" directory. The "src" field specifies the source file, and the "dest" field specifies the destination file. The "state" field is set to "link" to create a symbolic link. The "notify" field again names the handler to trigger if the task reports a change.

10. The "handlers" field defines a list of handlers that can be triggered by tasks in the playbook. A handler runs only when it has been notified by a task that reported a change, and by default it runs once, at the end of the play, no matter how many tasks notified it. In this example, the "restart Apache" handler is defined, which uses the "service" module to restart the Apache web server.

That's a brief

explanation of the various parts of an Ansible playbook. Playbooks can be much

more complex and can include many more tasks, modules, and variables. The power

of Ansible lies in its ability to automate complex workflows and orchestrate

them across multiple hosts and environments.
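The way "notify" and "handlers" interact is often misunderstood, so here is a toy Python model of it. It is a sketch of the behaviour, not Ansible's implementation: the tuple layout and the "changed" flags are assumptions for illustration.

```python
def run_play(tasks, handlers):
    """tasks: list of (name, changed, notify); handlers: dict of name -> callable."""
    notified = []
    for _name, changed, notify in tasks:
        # A handler is queued only when the notifying task reported a change.
        if changed and notify and notify not in notified:
            notified.append(notify)
    # Each queued handler runs once, after all tasks in the play have finished.
    return [handlers[name]() for name in notified]


handlers = {"restart Apache": lambda: "apache2 restarted"}
tasks = [
    ("Install Apache web server", False, None),
    ("Configure Apache virtual host", True, "restart Apache"),
    ("Enable Apache virtual host", True, "restart Apache"),
]
ran = run_play(tasks, handlers)
# Second run: nothing changed, so no handler fires.
unchanged = run_play([(name, False, notify) for name, _, notify in tasks], handlers)
```

Two tasks notify the same handler, yet Apache is restarted only once, and not at all on a run where nothing changed.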

Ansible galaxy?

Ansible Galaxy is a central repository for Ansible roles and collections (a collection can bundle modules, plugins, roles, and playbooks). It is an online platform where Ansible users can share and collaborate on pre-built code and automation solutions.

Using Ansible Galaxy, you can find and download

pre-built roles and collections to use in your own playbooks. You can also

upload your own roles and collections to share with the Ansible community.

Roles and collections available on Ansible Galaxy are contributed by the Ansible community and are not formally vetted, so check the source code and maintenance activity of a role before relying on it. You can search for roles and collections by name, author, tags, and other criteria.

Ansible Galaxy also provides a command-line

interface that you can use to manage your roles and collections. You can use

the ansible-galaxy command to install roles from Galaxy,

create your own roles, and upload your roles to Galaxy.

Overall, Ansible Galaxy is a valuable resource for

Ansible users, as it makes it easy to find and share pre-built code and

automation solutions, saving time and effort in developing your own Ansible

playbooks.

Ansible facts?

In Ansible, facts are pieces of information

about a target system that can be used by Ansible playbooks and tasks to

perform operations or make decisions. Ansible facts are collected by default

when an Ansible playbook is run, and they are stored as variables that can be

accessed by tasks and playbooks.

Ansible facts can include information about the

target system's hardware, operating system, network configuration, environment

variables, and more. Some common facts that can be collected by Ansible

include:

- ansible_hostname: The hostname of the target system.

- ansible_fqdn: The fully-qualified domain name of the target system.

- ansible_os_family: The family of operating system running on the target system (e.g., Debian, RedHat, Windows).

- ansible_distribution: The name of the Linux distribution running on the target system (e.g., Ubuntu, CentOS, Fedora).

- ansible_architecture: The CPU architecture of the target system (e.g., x86_64, arm64).

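You can use the ansible_facts variable to access all of the facts collected by Ansible in a playbook or task (for example ansible_facts['hostname']). By default the same facts are also available as top-level variables with an ansible_ prefix. For example, you could use the following task to print the hostname of the target system:

- name: Print hostname
  debug:
    var: ansible_hostname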

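Ansible also allows you to customize and extend the facts that it collects by defining your own custom facts. Custom (local) facts are files placed in /etc/ansible/facts.d on the target system with a .fact extension. They can be static JSON or INI files, or executable scripts written in any language available on the target system that print JSON. Ansible exposes them under the ansible_local variable, so they can provide additional information about the target system that is specific to your environment.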
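A custom fact can be as small as a script that prints JSON. The following Python sketch shows one possible shape. The fact names and the app_role value are hypothetical, and the script assumes Python 3 is available on the target system.

```python
#!/usr/bin/env python3
import json
import platform


def collect_facts():
    """Return a small dictionary of host details to expose as a custom fact."""
    return {
        "python_version": platform.python_version(),
        "machine": platform.machine(),
        "app_role": "webserver",   # hypothetical site-specific value
    }


if __name__ == "__main__":
    # Ansible reads the JSON printed on standard output.
    print(json.dumps(collect_facts()))
```

Saved on the target as /etc/ansible/facts.d/app.fact and made executable, the values would be expected to show up under ansible_local.app after the next fact-gathering run.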

Ansible roles with explanation?

In Ansible, a role is a way to organize and

reuse tasks, handlers, files, templates, and other resources in a structured

way. A role is essentially a collection of related tasks and files that can be

used to configure a specific aspect of a system, such as a web server or a

database.

A typical Ansible role contains the following

directories:

- defaults: This directory

contains default variables for the role. These variables are used if no

other value is specified in the playbook or inventory file.

- vars: This directory

contains variables that are specific to the role. These variables can be

used to override the default variables or to provide additional

information that is required by the role.

- tasks: This directory

contains the main tasks for the role. These tasks are executed when the

role is applied to a target system.

- handlers: This directory

contains handlers, which are tasks that are triggered by events in other

tasks. Handlers are typically used to restart services or reload

configurations after they have been changed.

- templates: This directory

contains Jinja2 templates that are used to generate configuration files or

other text files for the target system.

- files: This directory

contains files that are copied to the target system as-is. These files are

typically used for static configuration files or other data files that do

not require any modification.

- meta: This directory

contains metadata for the role, such as the dependencies that the role

requires.
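The relationship between defaults and vars is a matter of precedence. The Python sketch below models a simplified ordering (role defaults lowest, then inventory variables, then role vars, then extra vars passed on the command line). Real Ansible has many more precedence levels, and the variable names and values here are hypothetical.

```python
from collections import ChainMap

# Hypothetical values, for illustration only.
role_defaults = {"apache_port": 80, "apache_log_dir": "/var/log/apache2"}
inventory_vars = {"apache_port": 8080}
role_vars = {"apache_log_dir": "/srv/logs/apache2"}
extra_vars = {}

# ChainMap looks keys up left to right, so the highest-precedence source comes first.
resolved = ChainMap(extra_vars, role_vars, inventory_vars, role_defaults)

port = resolved["apache_port"]
log_dir = resolved["apache_log_dir"]
```

This is why values that users are expected to change belong in defaults, while values the role depends on internally belong in vars.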

Roles can be downloaded from Ansible Galaxy or

created manually. Once you have created a role, you can include it in your

playbook by specifying its name in the roles section of the

playbook. For example, to include a role named webserver, you would

use the following syntax:

- name: Configure web server
  hosts: webserver
  roles:
    - webserver

When this playbook

is run, Ansible will automatically search for the webserver role in the roles directory of the current playbook

directory, and execute the tasks, handlers, and other resources contained in

the role.

Roles are a powerful

way to organize and reuse Ansible code, and they make it easy to create

modular, maintainable, and scalable automation solutions.

Ansible roles with

example?

webserver/
├── defaults/
│   └── main.yml
├── files/
│   └── index.html
├── handlers/
│   └── main.yml
├── meta/
│   └── main.yml
├── tasks/
│   ├── main.yml
│   └── install.yml
├── templates/
│   └── apache.conf.j2
├── vars/
│   └── main.yml
└── README.md

In this example, the webserver role contains the following directories:

- defaults: This directory

contains default variables for the role, which are defined in main.yml. For example, you could define a default

variable for the Apache port number.

- files: This directory

contains a sample index.html file that will be

copied to the target system as-is.

- handlers: This directory

contains handlers for the role, which are defined in main.yml. For example, you could define a handler

to restart the Apache service after a configuration file has been changed.

- meta: This directory

contains metadata for the role, such as the dependencies that the role

requires.

- tasks: This directory contains the tasks for the role. Ansible loads main.yml automatically; other files such as install.yml run only when main.yml pulls them in with import_tasks or include_tasks. For example, install.yml could contain tasks to install Apache and its dependencies, while main.yml imports it and then runs the tasks that configure Apache.

- templates: This directory

contains Jinja2 templates that will be used to generate configuration

files for Apache on the target system.

- vars: This directory

contains variables that are specific to the role, which are defined in main.yml. For example, you could define a variable

for the Apache log directory.

Here's

an example of how you could use this role in an Ansible playbook:

- name: Configure web server
  hosts: webserver
  roles:
    - webserver

When this playbook

is run, Ansible will automatically execute the tasks, handlers, and other

resources contained in the webserver

role on the webserver

host. The result will be a configured and running Apache web server on the

target system.

Note that this is

just a basic example of an Ansible role, and real-world roles can be much more

complex and contain many more files and directories. However, the basic

structure and organization of a role should be similar to what is shown here.
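The directory layout shown above can be created by hand, with the ansible-galaxy init command, or with a few lines of code. The Python sketch below builds an empty skeleton in a temporary directory. It is an illustration of the layout only, not a replacement for ansible-galaxy, and the choice of which directories get a main.yml is an assumption based on the example tree.

```python
import tempfile
from pathlib import Path

ROLE_DIRS = ["defaults", "files", "handlers", "meta", "tasks", "templates", "vars"]
# Directories that conventionally hold a main.yml entry point.
MAIN_YML_DIRS = {"defaults", "handlers", "meta", "tasks", "vars"}


def scaffold_role(base_dir, role_name):
    """Create an empty role skeleton and return its root path."""
    root = Path(base_dir) / role_name
    for name in ROLE_DIRS:
        directory = root / name
        directory.mkdir(parents=True, exist_ok=True)
        if name in MAIN_YML_DIRS:
            (directory / "main.yml").write_text("---\n")
    (root / "README.md").write_text(f"# {role_name}\n")
    return root


with tempfile.TemporaryDirectory() as tmp:
    role_root = scaffold_role(tmp, "webserver")
    created = sorted(
        p.relative_to(role_root).as_posix()
        for p in role_root.rglob("*")
        if p.is_file()
    )
```

The files and templates directories start empty because their contents (index.html, apache.conf.j2) are specific to each role.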

Templates in Ansible with example?

In Ansible,

templates are used to dynamically generate configuration files that are

specific to a target host. The templates are written in Jinja2 syntax, which

allows you to include variables and logic to create more complex configuration

files. Here's an example of how to use templates in Ansible:

Let's say you have a

template file called nginx.conf.j2

that looks like this:

worker_processes {{ nginx_worker_processes }};
pid /run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    server {
        listen {{ nginx_listen_port }};
        server_name {{ nginx_server_name }};
        root {{ nginx_document_root }};

        location / {
            try_files $uri $uri/ =404;
        }
    }
}

In this template,

you can see that there are several variables enclosed in double curly braces

(e.g. {{ nginx_worker_processes }}).

These variables will be replaced with values specific to each target host when

the template is rendered.

To use this template

in an Ansible playbook, you would first define the variables you want to use in

the template. Here's an example vars

section of a playbook that defines some values for these variables:

vars:
  nginx_worker_processes: 4
  nginx_listen_port: 80
  nginx_server_name: example.com
  nginx_document_root: /var/www/html

Once

you have defined these variables, you can use the template module to

render the template and generate the configuration file on the target host.

Here's an example task that does this:

- name: Render nginx configuration file
  template:
    src: nginx.conf.j2
    dest: /etc/nginx/nginx.conf

This task renders the nginx.conf.j2 template on the control node, replacing the variables with their defined values, and then writes the resulting configuration file to /etc/nginx/nginx.conf on the target host.

Note that because templates are rendered on the control node, Jinja2 does not need to be installed on the target host. Jinja2 is installed on the control node as a dependency of Ansible itself.
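The substitution step can be imitated in plain Python to show what rendering means. This sketch is a stand-in for Jinja2, not Jinja2 itself: it handles only simple {{ name }} placeholders (no filters, loops, or conditionals), and the function name is an assumption.

```python
import re

# Matches {{ name }} with optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template, variables):
    """Replace each {{ name }} with its value; fail loudly on an undefined name."""
    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"undefined template variable: {name}")
        return str(variables[name])

    return PLACEHOLDER.sub(substitute, template)


template = "listen {{ nginx_listen_port }};\nserver_name {{ nginx_server_name }};\n"
rendered = render(
    template,
    {"nginx_listen_port": 80, "nginx_server_name": "example.com"},
)
```

Failing on an undefined variable rather than leaving the placeholder in place matches what you want from a configuration generator: a broken file should never reach the server.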

Dynamic inventory in Ansible?

Dynamic inventory in

Ansible is a feature that allows you to automatically discover and group hosts

based on a defined inventory script or plugin. This is useful in situations

where you have a large number of hosts that are constantly changing, such as in

cloud environments or container orchestration platforms.

Inventory plugins for the major platforms are distributed in Ansible collections, including amazon.aws.aws_ec2 for Amazon Web Services, google.cloud.gcp_compute for Google Cloud Platform, and community.docker.docker_containers for Docker containers. You can also write your own inventory scripts in Python or other languages, or use third-party inventory plugins.

To use a dynamic

inventory script or plugin in Ansible, you simply specify it in your ansible.cfg configuration file or on the command

line using the -i or --inventory option. For example:

ansible-playbook myplaybook.yml -i myinventory.py

In this example, myinventory.py is an executable Python script that defines the inventory of hosts that Ansible should use. Ansible runs the script with the --list argument and expects a JSON document on standard output that describes the groups, the hosts in each group, and any host variables (usually under the _meta.hostvars key).

Here's an example of

a simple dynamic inventory script for AWS EC2 instances:

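#!/usr/bin/env python3
"""Dynamic inventory script: lists EC2 instances that have a public IP."""
import json

import boto3

ec2 = boto3.client('ec2')
response = ec2.describe_instances()

# Ansible expects groups at the top level and host variables under _meta.
inventory = {'ec2': {'hosts': []}, '_meta': {'hostvars': {}}}

for reservation in response['Reservations']:
    for instance in reservation['Instances']:
        # Instances without a public address cannot be reached this way.
        if not instance.get('PublicIpAddress'):
            continue
        hostname = instance.get('PublicDnsName') or instance['PublicIpAddress']
        inventory['ec2']['hosts'].append(hostname)
        inventory['_meta']['hostvars'][hostname] = {
            'ansible_host': instance['PublicIpAddress'],
            'ansible_user': 'ubuntu',
            'ansible_ssh_private_key_file': '~/.ssh/mykey.pem',
            'tags': instance.get('Tags', []),
        }

print(json.dumps(inventory))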

This script uses the Boto3 library to query the EC2 API and generate an inventory of instances. It sets some Ansible-specific variables for each host, such as the SSH private key to use, and records the tags assigned to each instance as a host variable named tags.

With this inventory script, you can run Ansible commands and playbooks against your EC2 instances by passing the script with the -i option:

ansible all -m ping -i myinventory.py

This

command will use the myinventory.py script to

discover the EC2 instances, and then run the ping module on

all of them.
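A natural next step is to turn instance tags into Ansible groups, so that playbooks can target hosts by role. The Python sketch below shows one way to do that for host variables shaped like the ones in the script above, where tags is a list of Key/Value pairs. The group naming scheme and the sample hosts are assumptions for illustration.

```python
def groups_from_tags(hostvars, tag_key):
    """Build {"<tag_key>_<value>": {"hosts": [...]}} from each host's tag list."""
    groups = {}
    for hostname, variables in sorted(hostvars.items()):
        for tag in variables.get("tags", []):
            if tag["Key"] == tag_key:
                group = f"{tag_key}_{tag['Value']}".lower()
                groups.setdefault(group, {"hosts": []})["hosts"].append(hostname)
    return groups


# Hypothetical hosts, for illustration only.
hostvars = {
    "web1.example.com": {"tags": [{"Key": "Role", "Value": "web"}]},
    "db1.example.com": {"tags": [{"Key": "Role", "Value": "db"}]},
    "web2.example.com": {
        "tags": [{"Key": "Role", "Value": "web"}, {"Key": "Env", "Value": "prod"}]
    },
}
groups = groups_from_tags(hostvars, "Role")
```

Merging the returned dictionary into the top level of the inventory JSON would let a play use hosts: role_web.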

Docker

difference between image and container

In the context of Docker, an image is a

lightweight, standalone, and executable package that includes everything needed

to run an application, such as the code, dependencies, and system libraries.

Images are typically built from a Dockerfile, which is a text file that

contains instructions for building the image. Once an image is built, it can be

used to create one or more containers.

A container, on the other hand, is a runtime instance of an image. Containers provide an isolated environment for running an application and its dependencies, without the need for a separate operating system or virtual machine. Containers share the kernel of the host operating system and are designed to be ephemeral: changes made inside a container live in its own writable layer, survive a stop and restart, but are lost when the container is removed unless the data is stored in a volume or bind mount.

In simpler terms, an image is like a recipe or a

blueprint for creating a container, while a container is like a running

instance of that image, with its own isolated environment.
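The blueprint analogy can be made concrete with a toy Python model. This is a sketch of the idea only, not of how Docker stores layers: the class names and the file path are assumptions for illustration.

```python
from types import MappingProxyType


class Image:
    """A read-only set of files, built once."""

    def __init__(self, files):
        self.files = MappingProxyType(dict(files))   # immutable view


class Container:
    """A running instance: the image's files plus a private writable layer."""

    def __init__(self, image):
        self.image = image
        self.writable_layer = {}

    def write(self, path, content):
        self.writable_layer[path] = content

    def read(self, path):
        # The writable layer shadows the image, as in a union filesystem.
        if path in self.writable_layer:
            return self.writable_layer[path]
        return self.image.files[path]


image = Image({"/app/config": "debug=false"})
first, second = Container(image), Container(image)
first.write("/app/config", "debug=true")
```

Both containers come from the same image, but the change made in the first one is invisible to the second and never touches the image itself.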

Difference between VM and container

Both virtual machines (VMs) and containers are

ways to run applications in an isolated environment, but there are some key

differences between the two:

1. Architecture: A VM runs on top of a hypervisor, which virtualizes the underlying hardware resources, including CPU, memory, and storage. Each VM runs its own guest operating system, which can be different from the host operating system. In contrast, a container runs on top of the host operating system's kernel, and does not require a separate guest operating system.

2. Isolation: A VM provides full hardware-level isolation, meaning that each VM has its own virtualized CPU, memory, and storage resources. In contrast, a container provides process-level isolation, meaning that each container shares the host operating system's resources, but has its own isolated environment for running applications and dependencies.

3. Footprint: VMs are typically larger and heavier than containers, because they require a complete guest operating system and virtualized hardware resources. Containers are lightweight and have a smaller footprint, because they share the host operating system's kernel and do not require a separate guest operating system.

4. Performance: VMs may have a higher overhead and lower performance than containers, because of the additional layer of virtualization and the need to emulate hardware resources. Containers have lower overhead and higher performance, because they share the host operating system's resources and do not require hardware virtualization.

In summary, VMs provide full hardware-level

isolation and allow different operating systems to run on the same hardware,

while containers provide process-level isolation and are lighter weight, more

efficient, and better suited for running multiple instances of the same

application on the same hardware.

A Dockerfile and

Docker Compose are both tools used in the Docker ecosystem, but they serve

different purposes:

1. Dockerfile: A Dockerfile is a text file that contains

instructions for building a Docker image. The Dockerfile specifies the base

image to use, any additional software packages to install, and any

configuration to set up within the image. Once the Dockerfile is created, it

can be used to build a Docker image that includes all the necessary components

to run an application.

2. Docker Compose: Docker Compose is a tool for defining and

running multi-container Docker applications. Docker Compose uses a YAML file to

define the services that make up the application, along with any configuration

options needed for each service. With Docker Compose, you can define multiple

containers that work together to form an application, such as a web server, a

database, and a caching layer.

In summary, a

Dockerfile is used to create a Docker image, while Docker Compose is used to

orchestrate multiple containers and services as a single application. You can

use both tools together to create a complete, multi-container application that

can be easily built, deployed, and managed.

docker daemon

The Docker daemon is a background process that

runs on a host machine and manages the Docker runtime environment. The Docker

daemon is responsible for:

1. Managing Docker objects: The Docker daemon creates, manages, and deletes Docker objects such as containers, images, volumes, and networks.

2. Communicating with the Docker client: The Docker daemon listens for requests from the Docker client and responds by executing the requested actions, such as running a container or building an image.

3. Interacting with the host operating system: The Docker daemon communicates with the host operating system to manage the allocation of hardware resources such as CPU, memory, and storage.

4. Pulling and pushing Docker images: The Docker daemon pulls images from Docker registries such as Docker Hub, and pushes images to registries as well.

The Docker daemon (dockerd) normally runs as root and, by default, listens on a local Unix socket, so access to that socket should be limited to trusted users. The daemon can be configured through a variety of settings and options, including networking, storage, and security settings.
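The client and daemon talk over an HTTP API, by default on the Unix socket /var/run/docker.sock. The Python sketch below checks whether a daemon is answering by calling the API's /_ping endpoint using only the standard library. It assumes a Linux or macOS host and the default socket path; the class and function names are made up for this example.

```python
import http.client
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.settimeout(self.timeout)
        self.sock.connect(self.socket_path)


def ping_daemon(socket_path="/var/run/docker.sock"):
    """Return True if the daemon answers the /_ping endpoint with HTTP 200."""
    connection = UnixSocketHTTPConnection(socket_path)
    try:
        connection.request("GET", "/_ping")
        return connection.getresponse().status == 200
    except (OSError, http.client.HTTPException):
        return False          # socket missing, permission denied, or daemon down
    finally:
        connection.close()
```

Calling ping_daemon() on a machine where your user cannot read the socket returns False, which is the same permission boundary the docker group controls.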

Difference between docker swarm & Kubernetes

Docker Swarm and

Kubernetes are both container orchestration tools that allow developers to

manage and scale containerized applications, but they have some key

differences:

1. Architecture: Docker Swarm is a native clustering tool for

Docker containers, while Kubernetes is an open-source container orchestration

platform that can run on any cloud provider or infrastructure.

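2. Scalability: Both tools can scale applications horizontally by adding more instances of containers, but Kubernetes provides more advanced scaling features, such as automatic scaling based on CPU and memory usage through the Horizontal Pod Autoscaler. Docker Swarm scales services only when you change the replica count yourself.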

3. Ease of use: Docker Swarm is easier to set up and use than

Kubernetes, because it has fewer components and requires less configuration.

However, Kubernetes provides more advanced features and greater flexibility.

4. Networking: Docker Swarm uses a built-in overlay network for container communication, while Kubernetes relies on a separately installed network plugin (a CNI plugin) for pod-to-pod communication.

5. Ecosystem: Kubernetes has a larger and more active ecosystem

than Docker Swarm, with a wider range of plugins, tools, and integrations

available.

In summary, Docker Swarm is a simpler and more lightweight container orchestration tool that is easy to use and provides basic scaling and management features, while Kubernetes is a more powerful and flexible platform that is better suited for large-scale, mission-critical applications that require advanced features and high availability.
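
To make the automatic scaling point concrete, here is a minimal sketch of a HorizontalPodAutoscaler manifest, written to a local file. The Deployment name web, the replica bounds, and the 70% CPU target are assumptions for illustration, and the autoscaler only works if a metrics source such as metrics-server is running in the cluster.

```shell
# Write a HorizontalPodAutoscaler manifest to hpa.yaml.
# "web" is a hypothetical Deployment assumed to exist already.
cat > hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 2        # never scale below two pods
  maxReplicas: 10       # never scale above ten pods
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # target average CPU across pods
EOF

cat hpa.yaml
```

With cluster access, kubectl apply -f hpa.yaml would create the autoscaler and kubectl get hpa would show its current state. Docker Swarm has no built-in equivalent; replica counts are changed by hand with docker service scale.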

Components of Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. The key components of Kubernetes include:

1. Master node: The master node, called the control plane in current Kubernetes documentation, is responsible for managing the cluster. It controls the scheduling and deployment of containers on worker nodes.

2. Worker node: The worker node is a server that runs the application containers. It receives instructions from the master node and runs the containers accordingly.

3. Pods: A pod is the smallest unit of deployment in Kubernetes. It is a logical host for one or more containers that share the same network namespace and can communicate with each other over localhost.

4. Replication controller: The replication controller ensures that a specified number of pods are running at all times. It monitors the state of each pod and creates new ones if any pod fails or is terminated. In current Kubernetes versions this role is usually filled by a ReplicaSet managed through a Deployment.

5. Service: A service is an abstraction that provides a consistent way to access a set of pods. It can load balance traffic across the pods and provide a stable IP address and DNS name for the service.

6. Volume: A volume is a directory that is accessible to containers in a pod. It provides a way to store data and share it between the containers in that pod.

7. Namespace: A namespace is a way to organize and isolate resources within a cluster. It provides a virtual cluster within a physical cluster.

8. ConfigMap and Secret: These are Kubernetes resources that can be used to store configuration data and sensitive information such as passwords or API keys.

9. Deployment: A deployment is a higher-level resource that provides declarative updates for pods and ReplicaSets. Users specify the desired state of the application, and Kubernetes works to maintain that state.

10. StatefulSet: A StatefulSet is a higher-level resource for managing stateful applications such as databases. It gives each pod a stable identity and creates and scales pods in a predictable order.
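
Several of these components can be seen together in one small manifest. The sketch below writes a Namespace and a Deployment to a local file; the names demo and web, the nginx:1.25 image, and the replica count are assumptions picked for the example.

```shell
# Write a Namespace and a Deployment to web.yaml.
# All names and the image tag are hypothetical example values.
cat > web.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  namespace: demo
spec:
  replicas: 3              # desired number of pods
  selector:
    matchLabels:
      app: web             # must match the pod template labels
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
EOF

cat web.yaml
```

Applying the file with kubectl apply -f web.yaml would create the namespace, and the Deployment would create a ReplicaSet that keeps three pods running. kubectl get pods -n demo lists them.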

Architecture of Kubernetes

The architecture of Kubernetes is based on a master-worker model, where the master node manages and controls the worker nodes. The following is a high-level overview of the Kubernetes architecture:

1. Master node: The master node is responsible for managing the Kubernetes cluster. It runs the Kubernetes API server, which provides a RESTful API for managing the cluster. The API server is the central point of control for the cluster and is responsible for receiving requests from users or other components of the system, and making changes to the desired state of the cluster. The master node also runs other important components, such as the etcd data store, the controller manager, and the scheduler.

2. Worker node: The worker node is a server that runs the application containers. It receives instructions from the master node and runs the containers accordingly. Each worker node runs the Kubernetes node agent, the kubelet, which communicates with the Kubernetes API server and manages the containers running on that node.

3. Pods: A pod is the smallest unit of deployment in Kubernetes. It is a logical host for one or more containers that share the same network namespace and can communicate with each other over localhost. Pods are scheduled onto worker nodes by the Kubernetes scheduler, which takes into account factors such as resource availability and affinity/anti-affinity rules.

4. Controllers: Controllers are responsible for ensuring that the desired state of the system is maintained. There are several types of controllers in Kubernetes, including the ReplicationController, ReplicaSet, Deployment, and StatefulSet controllers. Each of these has a different role in managing the lifecycle of pods and other resources.

5. Services: A service is an abstraction that provides a consistent way to access a set of pods. It can load balance traffic across the pods and provide a stable IP address and DNS name for the service. Services can be exposed to the outside world through a Kubernetes Ingress, which, together with an ingress controller, routes HTTP traffic to the correct service based on the URL path or host header.

6. Volumes: Volumes provide storage for containers running in pods. Kubernetes supports several types of volumes, including hostPath, emptyDir, configMap, secret, and persistent volume claims. Only some of these are persistent: an emptyDir volume is deleted together with its pod, while a persistent volume claim keeps its data after the pod is gone.

7. Namespaces: Namespaces provide a way to logically isolate resources within a cluster. Each namespace has its own set of pods, services, and other resources, and can be used to enforce access control policies or provide multitenancy.

Overall, the Kubernetes architecture is designed to be highly scalable and resilient, with built-in support for high availability and fault tolerance. The system is also extensible, with a large ecosystem of plugins and extensions that can be used to customize its behavior and add new functionality.
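
The pod and volume descriptions above can be sketched as a single Pod with two containers sharing an emptyDir volume. The pod name, container names, and busybox:1.36 image are assumptions for the example; the point is only that both containers see the same /data directory.

```shell
# Write a two-container Pod that shares an emptyDir volume to pod.yaml.
# Names and image tag are hypothetical example values.
cat > pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo
spec:
  containers:
    - name: writer
      image: busybox:1.36
      # Appends a timestamp to the shared file every five seconds.
      command: ["sh", "-c", "while true; do date >> /data/out.log; sleep 5; done"]
      volumeMounts:
        - name: scratch
          mountPath: /data
    - name: reader
      image: busybox:1.36
      # Follows the same file through its own mount of the volume.
      command: ["sh", "-c", "touch /data/out.log; tail -f /data/out.log"]
      volumeMounts:
        - name: scratch
          mountPath: /data
  volumes:
    - name: scratch
      emptyDir: {}         # removed when the pod is deleted
EOF

cat pod.yaml
```

Once applied with kubectl apply -f pod.yaml, kubectl logs shared-volume-demo -c reader should show the timestamps written by the other container. Because the volume is an emptyDir, the data does not survive deletion of the pod.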

Services in Kubernetes

In Kubernetes, a Service is an abstraction that provides a stable IP address and DNS name for a set of Pods. It acts as a load balancer, routing traffic to the Pods that make up the Service. Services can be used to expose an application to external clients, or to provide internal connectivity between microservices within a cluster.

The following are some key features of Kubernetes Services:

1. Load balancing: Services distribute incoming connections across the Pods that make up the Service. The spread is roughly even rather than strictly equal, since the default proxy mode typically picks a backend at random for each connection, but it keeps any single Pod from taking all the traffic and improves application availability and performance.

2. Service discovery: Services provide a stable IP address and DNS name for a set of Pods. This allows clients to connect to the Service without needing to know the IP addresses of the individual Pods. Kubernetes also supports automatic DNS-based service discovery, allowing Pods to discover and connect to other Services by name.

3. Session affinity: Services can be configured to maintain session affinity, so that requests from a particular client IP address are routed to the same Pod. This can be useful for applications that keep client state in memory. Affinity is based on the client IP, not on cookies; cookie-based stickiness is usually handled by an ingress controller.

4. Service types: Kubernetes supports several types of Services, including ClusterIP, NodePort, LoadBalancer, and ExternalName. ClusterIP Services provide a stable IP address and DNS name for internal access within a cluster. NodePort Services expose the Service on the same port on every node in the cluster, allowing external clients to connect to the Service. LoadBalancer Services provide an external load balancer for a Service, typically by integrating with a cloud provider's load balancing service. ExternalName Services map a Service name to an external DNS name.

5. Service endpoints: Kubernetes maintains a list of endpoints for each Service, which are the IP addresses and port numbers of the Pods that make up the Service. Endpoints are updated dynamically as Pods matching the Service's selector are added or removed.

Overall, Services are a key component of Kubernetes, providing a powerful and flexible way to expose and connect microservices within a cluster. By abstracting away the underlying network infrastructure, Services make it easy to build scalable and resilient applications that can handle large volumes of traffic.
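
As a minimal sketch of the features listed above, the manifest below defines a NodePort Service with client-IP session affinity. The Service name web, the app: web selector, and port 30080 are assumptions for illustration; the node port has to fall inside the cluster's node port range, which is 30000-32767 by default.

```shell
# Write a NodePort Service with session affinity to service.yaml.
# The selector assumes pods labelled app=web exist in the same namespace.
cat > service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: NodePort
  selector:
    app: web               # pods with this label become endpoints
  sessionAffinity: ClientIP  # same client IP goes to the same pod
  ports:
    - port: 80             # port on the Service's cluster IP
      targetPort: 80       # port on the pod
      nodePort: 30080      # port opened on every node
EOF

cat service.yaml
```

After kubectl apply -f service.yaml, kubectl get endpoints web lists the pod addresses currently behind the Service. Inside the cluster, other pods in the same namespace can reach it by the DNS name web.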

Kubernetes cheatsheet

Here is a Kubernetes cheat sheet with some of the most commonly used commands and concepts:

Kubernetes Objects:

- Pods: A single instance of a running process in a cluster.

- Deployments: A declarative way to manage Pods, including scaling and rolling updates.

- Services: An abstraction that defines a logical set of Pods and a policy for accessing them.

- ConfigMaps: A way to decouple configuration data from container images.

- Secrets: A way to store sensitive information, such as passwords or API keys, separately from Pod specs and container images. Values are only base64-encoded by default, so access to Secrets should be restricted.
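
For the last two objects, a short sketch of a ConfigMap and a Secret follows. The names app-config and app-secret, the keys, and the placeholder values are all assumptions; stringData lets the value be written as plain text in the manifest, and Kubernetes stores it base64-encoded.

```shell
# Write a ConfigMap and a Secret to config.yaml.
# Names, keys, and values are hypothetical placeholders.
cat > config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  LOG_LEVEL: "info"        # non-sensitive setting
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret
type: Opaque
stringData:
  API_KEY: "replace-me"    # placeholder, never commit real keys
EOF

cat config.yaml
```

After kubectl apply -f config.yaml, kubectl get secret app-secret -o yaml shows the value in base64 form, which is an encoding and not encryption.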

Kubernetes Commands:

- kubectl create: Create a Kubernetes